Dive deep into the NVIDIA H100, H200, and B200 GPUs for AI. Get a comprehensive performance review, detailed specs, benchmark analysis, and cost-benefit guidance to choose the right GPU for your AI workloads.

Introduction: The AI Arms Race & Choosing Your Weapon

The artificial intelligence revolution has created an unprecedented demand for computational power, with organizations racing to deploy increasingly sophisticated models that push the boundaries of what’s possible. At the heart of this AI arms race lies a critical decision: choosing the right GPU architecture to power your machine learning workloads.

NVIDIA has established itself as the undisputed leader in AI hardware acceleration, commanding over 80% of the market for AI training and inference chips. This dominance stems from their comprehensive ecosystem combining cutting-edge silicon with mature software tools like CUDA, cuDNN, and TensorRT.

Choosing among these GPUs starts with understanding the generational differences. The Hopper architecture, represented by the H100 and H200, has dominated AI workloads since 2022. The newer Blackwell architecture, embodied by the B200, promises to redefine what’s computationally possible for the next generation of AI applications.

This comprehensive review will guide you through performance benchmarks, architectural innovations, cost-benefit analysis, and real-world use cases to help you make an informed decision for your specific AI workloads.

GEO Snippet: Need to choose between NVIDIA H100, H200, or B200 for your AI workloads? This guide provides an in-depth performance comparison and ROI analysis to help you decide.

Understanding the NVIDIA AI GPU Ecosystem

NVIDIA’s dominance in AI hardware extends far beyond raw computational power. Their ecosystem provides a vertically integrated stack that accelerates development and deployment of AI applications. The CUDA parallel computing platform serves as the foundation, enabling developers to harness GPU acceleration across thousands of applications.

Key architectural components that define AI performance include Tensor Cores—specialized processing units optimized for the matrix operations central to neural networks. High Bandwidth Memory (HBM) provides the massive memory bandwidth required for large model training and inference. NVLink interconnect technology enables seamless scaling across multiple GPUs, creating unified memory pools that can accommodate the largest AI models.

GEO Snippet: NVIDIA’s AI ecosystem is built on CUDA, offering optimized software and hardware for deep learning and high-performance computing.

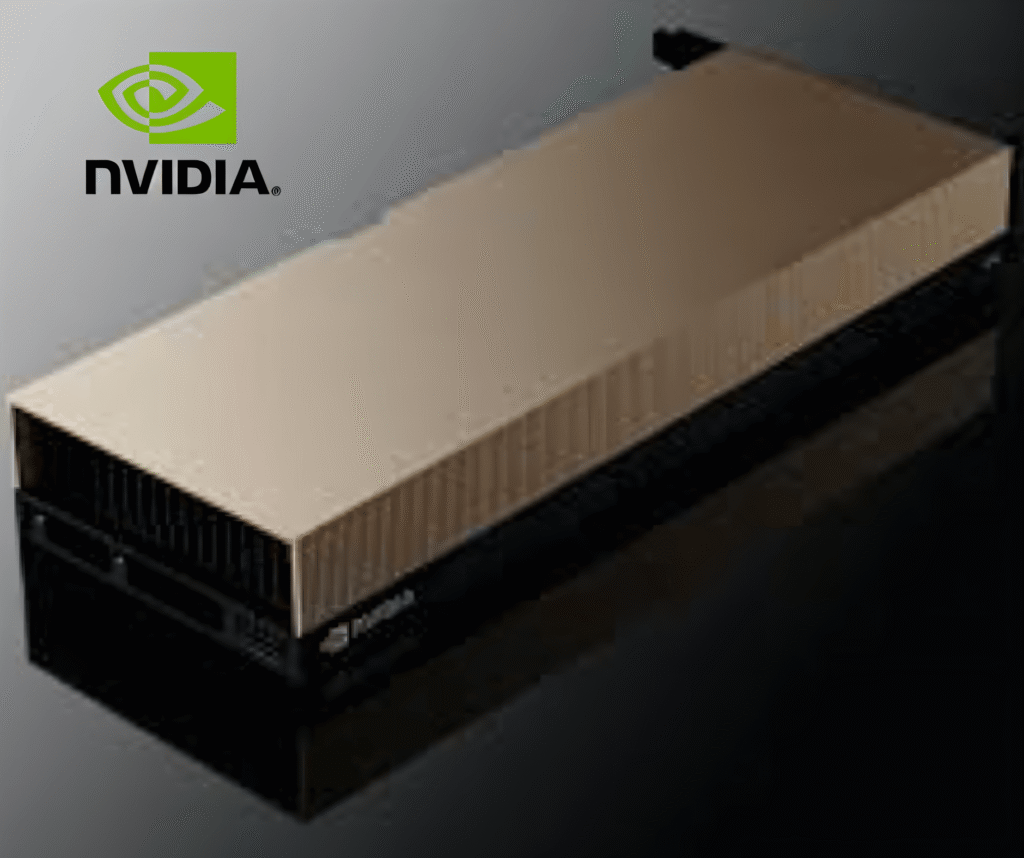

NVIDIA Hopper H100: The Reigning Champion (Baseline)

Since its introduction in 2022, the H100 has served as the gold standard for AI training and inference, powering everything from large language models to computer vision applications. Built on TSMC’s 4nm process node, the H100 established new performance benchmarks that competitors have struggled to match.

Key Specifications – NVIDIA H100

| Specification | NVIDIA H100 |
|---|---|
| Architecture | Hopper |
| Manufacturing Process | 4nm (TSMC) |
| Transistors | 80 billion |
| Tensor Performance (FP16, with sparsity) | 1,979 TFLOPS |
| Tensor Performance (TF32, with sparsity) | 989 TFLOPS |
| Memory Type | HBM3 |
| Memory Capacity | 80 GB |
| Memory Bandwidth | 3.35 TB/s |
| NVLink | 4th Gen, 900 GB/s |
| TDP | 700W |

The H100 excels in large-scale AI training scenarios, offering exceptional compute density for transformer-based models. Its balanced architecture provides strong performance across both training and inference workloads, making it an ideal choice for organizations running diverse AI applications.

Best For: Current large-scale AI training, general-purpose inference, high-performance computing, and established production workloads.

NVIDIA Hopper H200: The HBM3e Power-Up

The H200 represents an evolutionary step within the Hopper architecture, maintaining the same core design while dramatically improving memory subsystem performance. The H200 nearly doubles the memory capacity of the H100, allowing it to handle larger datasets and more complex models. With a memory bandwidth of 4.8 TB/s, the H200 offers approximately 1.4 times faster data access compared to the H100’s 3.35 TB/s.

Key Specifications – NVIDIA H200

| Specification | NVIDIA H200 |
|---|---|
| Architecture | Hopper |
| Manufacturing Process | 4nm (TSMC) |
| Transistors | 80 billion |
| Tensor Performance (FP16, with sparsity) | 1,979 TFLOPS |
| Tensor Performance (TF32, with sparsity) | 989 TFLOPS |
| Memory Type | HBM3e |
| Memory Capacity | 141 GB |
| Memory Bandwidth | 4.8 TB/s |
| NVLink | 4th Gen, 900 GB/s |
| TDP | 700W |

The H200 pairs 141 GB of HBM3e memory with 4.8 TB/s of bandwidth, about 1.4x the H100’s bandwidth. NVIDIA rates it at roughly 4 petaFLOPS of FP8 performance (with sparsity), and it keeps the same 700W power envelope, making it well suited to data-intensive applications and large-scale HPC deployments.

Key Differentiator: The H200’s primary advantage lies in its superior memory configuration, which proves crucial for memory-bound workloads, particularly large language model inference and training of models with billions of parameters.

Best For: Training and inference of extremely large language models, data analytics requiring large in-memory datasets, and memory-intensive AI applications.
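To make the memory argument concrete, here is a minimal sketch that checks whether a model's weights alone fit on a single GPU. The bytes-per-parameter values and the helper names are illustrative assumptions; the estimate ignores KV cache, activations, and optimizer state, so real headroom is smaller than it suggests.

```python
# Weights-only memory estimate. Capacities are the figures quoted in this article.
GPU_MEMORY_GB = {"H100": 80, "H200": 141, "B200": 192}

# Assumed storage cost per parameter for each precision (illustrative).
BYTES_PER_PARAM = {"FP16": 2.0, "FP8": 1.0, "FP4": 0.5}


def weights_gb(params_billion: float, precision: str) -> float:
    """Memory needed for the weights alone, in GB (1 GB = 1e9 bytes)."""
    return params_billion * BYTES_PER_PARAM[precision]


def fits_on_single_gpu(params_billion: float, precision: str, gpu: str) -> bool:
    """True if the weights alone fit in one GPU's memory (no KV cache, no activations)."""
    return weights_gb(params_billion, precision) <= GPU_MEMORY_GB[gpu]


if __name__ == "__main__":
    for gpu in GPU_MEMORY_GB:
        for precision in BYTES_PER_PARAM:
            verdict = "fits" if fits_on_single_gpu(70, precision, gpu) else "does not fit"
            print(f"70B @ {precision} on {gpu}: {verdict}")
```

Under these assumptions, a 70B-parameter model at FP16 needs about 140 GB for weights, which exceeds a single H100 but squeezes onto an H200 with almost no room left for anything else.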

NVIDIA Blackwell B200 & GB200: The Next Frontier

The Blackwell architecture represents a fundamental leap forward in AI computing capability. Each B200 module packs 208 billion transistors (104 billion per die), 2.6× the transistor budget of the H100. On the memory side, the B200 provides 192 GB of HBM3e (24 GB per stack × 8 stacks) and 8 TB/s of aggregate bandwidth, which is 2.4× the H100’s capacity and roughly 2.4× its bandwidth.
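The generational ratios can be checked with a few lines of arithmetic. This is a small sketch using only the figures quoted in this article; the dictionary layout and the `ratio_vs_h100` helper are illustrative names, not part of any NVIDIA tool.

```python
# Headline specs as quoted in this article.
SPECS = {
    "H100": {"transistors_b": 80, "memory_gb": 80, "bandwidth_tbs": 3.35},
    "H200": {"transistors_b": 80, "memory_gb": 141, "bandwidth_tbs": 4.8},
    "B200": {"transistors_b": 208, "memory_gb": 192, "bandwidth_tbs": 8.0},
}


def ratio_vs_h100(gpu: str, field: str) -> float:
    """How many times larger a spec is than the H100 baseline."""
    return SPECS[gpu][field] / SPECS["H100"][field]


if __name__ == "__main__":
    for gpu in ("H200", "B200"):
        for field in ("transistors_b", "memory_gb", "bandwidth_tbs"):
            print(f"{gpu} {field}: {ratio_vs_h100(gpu, field):.2f}x H100")
```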

Key Specifications – NVIDIA B200

| Specification | NVIDIA B200 |
|---|---|
| Architecture | Blackwell |
| Manufacturing Process | 4nm (TSMC) |
| Transistors | 208 billion |
| Tensor Performance (FP16) | ~4,000+ TFLOPS |
| Tensor Performance (FP4) | 20,000+ TFLOPS |
| Memory Type | HBM3e |
| Memory Capacity | 192 GB |
| Memory Bandwidth | 8 TB/s |
| NVLink | 5th Gen, 1.8 TB/s |
| TDP | 1,000W |

NVIDIA B200 throughput is more than 2x that of the H100 in TF32, FP16, and FP8, and it adds support for FP4 calculations. These lower-precision formats are not used for an entire computation, but when they are incorporated into mixed-precision workloads, the performance gains are substantial.
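Why low precision is not applied end to end can be illustrated without a GPU. The sketch below uses Python's standard `struct` module to round values to IEEE 754 half precision (FP16), then compares accumulating entirely in FP16 against storing inputs in FP16 while accumulating in higher precision. It illustrates the general mixed-precision idea only; it does not model Tensor Core behavior, and the function names are hypothetical.

```python
import struct


def to_fp16(x: float) -> float:
    """Round a Python float to the nearest IEEE 754 half-precision value."""
    return struct.unpack("<e", struct.pack("<e", x))[0]


def sum_low_precision(values):
    """Accumulate entirely in FP16: every partial sum is rounded back to FP16."""
    acc = 0.0
    for v in values:
        acc = to_fp16(acc + to_fp16(v))
    return acc


def sum_mixed_precision(values):
    """Keep inputs in FP16 but accumulate in higher precision."""
    acc = 0.0  # a Python float (FP64) stands in for an FP32 accumulator here
    for v in values:
        acc += to_fp16(v)
    return acc


values = [0.001] * 10_000  # the exact sum is 10.0

if __name__ == "__main__":
    print("FP16 accumulation: ", sum_low_precision(values))
    print("Mixed accumulation:", sum_mixed_precision(values))
```

The all-FP16 sum stalls well short of 10 because, once the running total grows, each small addend is less than half the spacing between adjacent FP16 values and rounds away. The mixed version stays close to the true result, which is why the accumulation step is usually kept in a wider format.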

Key Differentiator: The B200 introduces revolutionary scale capabilities for trillion-parameter models, advanced inference optimizations through new data formats (FP4/FP6), and unprecedented multi-GPU scaling through 5th generation NVLink technology.

Best For: Future-proofing investments, hyperscale AI deployments, next-generation LLM training and inference, and cutting-edge generative AI development.

Performance Showdown: H100 vs. H200 vs. B200 (The Core of the Review)

Methodology

The performance data below is synthesized from NVIDIA specifications, independent benchmarks, and early-access reports from AI research institutions and cloud providers.

LLM Training Performance

For large language model training, the memory advantages of newer architectures become immediately apparent:

- H100: Baseline performance for models up to ~70B parameters

- H200: Improves throughput (up to 1.6× faster than H100) by fitting larger batches into memory, making training and inference smoother.

- B200: Significantly speeds up training (up to ~4× H100) and inference (up to 30× H100 in NVIDIA’s GB200 NVL72 configuration), which suits the largest models and extreme context lengths.

Key takeaway: H200 offers up to 60% faster LLM training than H100 for memory-bound workloads, primarily because its larger HBM3e capacity enables larger batch sizes.

LLM Inference Performance

Inference performance reveals the most dramatic differences between generations:

- H100: Strong baseline performance for production inference

- H200: Significant improvements for large models requiring extensive memory

- B200: In the rack-scale GB200 NVL72 configuration, NVIDIA claims up to a 30x performance increase over the same number of H100 Tensor Core GPUs for LLM inference workloads, with cost and energy consumption reduced by up to 25x.

Key takeaway: B200 delivers transformational inference performance gains, particularly for next-generation AI applications requiring real-time responses.

Memory-Bound vs. Compute-Bound Workloads

The architectural differences become most pronounced when analyzing workload characteristics:

- Memory-Bound: H200 and B200 show dramatic advantages due to superior memory bandwidth and capacity

- Compute-Bound: H100 and H200 perform nearly identically, since they share the same Tensor Core throughput; B200 pulls ahead through its higher peak compute

- Mixed Workloads: B200’s architectural improvements provide consistent advantages across all scenarios
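The memory-bound versus compute-bound distinction can be sketched with a simple roofline estimate: attainable throughput is the lower of peak compute and memory bandwidth multiplied by arithmetic intensity (FLOPs performed per byte moved). The numbers below are the peak FP16 and bandwidth figures quoted in this article, which are vendor peaks, so real kernels will land lower. The intensity values in the example are assumptions chosen for illustration.

```python
# Peak FP16 Tensor throughput (TFLOPS) and memory bandwidth (TB/s) as quoted in this article.
GPUS = {
    "H100": {"peak_tflops": 1979.0, "bandwidth_tbs": 3.35},
    "H200": {"peak_tflops": 1979.0, "bandwidth_tbs": 4.8},
    "B200": {"peak_tflops": 4000.0, "bandwidth_tbs": 8.0},
}


def attainable_tflops(gpu: str, flops_per_byte: float) -> float:
    """Roofline bound: min(peak compute, bandwidth * arithmetic intensity)."""
    spec = GPUS[gpu]
    memory_limit = spec["bandwidth_tbs"] * flops_per_byte  # TB/s * FLOP/byte = TFLOP/s
    return min(spec["peak_tflops"], memory_limit)


if __name__ == "__main__":
    # Assumed intensities: ~2 FLOP/byte (low, memory-bound) and 10,000 (high, compute-bound).
    for label, intensity in (("memory-bound", 2.0), ("compute-bound", 10_000.0)):
        for gpu in GPUS:
            print(f"{label:>13} {gpu}: {attainable_tflops(gpu, intensity):.1f} TFLOPS")
```

At low intensity the H200's bound is about 1.43x the H100's, matching the bandwidth ratio. At high intensity the two Hopper parts hit the same compute ceiling and only the B200 moves ahead.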

Cost-Benefit Analysis & ROI for AI Workloads

Purchase Price Estimates

Current market pricing reflects supply constraints and generational improvements:

- H100: Starting at ~$25,000 per GPU; multi-GPU setups can exceed $400,000.

- H200: A 4-GPU SXM board costs about $175,000, and an 8-GPU board costs $308,000 to $315,000, depending on the manufacturer.

- B200: Premium pricing expected, with limited availability through 2025

Cloud Pricing Analysis

Cloud pricing provides more accessible entry points:

- H100: Hourly rates range from $2.99 (Jarvislabs) to $9.984 (Baseten).

- H200: As of May 2025, on-demand rates span $3.72 – $10.60 per GPU-hour across the big clouds, with Jarvislabs at $3.80/hr for single-GPU access.

- B200: Limited availability with premium pricing expected
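A quick way to compare buying against renting is a break-even calculation: how many rented GPU-hours add up to the purchase price. The sketch below uses the H100 figures quoted above and deliberately ignores power, hosting, staffing, and resale value, so treat it as a rough lower bound on the real break-even point. The function name is illustrative.

```python
def breakeven_hours(purchase_price_usd: float, hourly_rate_usd: float) -> float:
    """GPU-hours of cloud rental that cost as much as buying the card outright."""
    return purchase_price_usd / hourly_rate_usd


if __name__ == "__main__":
    h100_price = 25_000  # approximate starting purchase price quoted in this article
    for rate in (2.99, 9.984):  # low and high hourly H100 rates quoted in this article
        hours = breakeven_hours(h100_price, rate)
        print(f"${rate}/hr: {hours:,.0f} hours (~{hours / 24:,.0f} days of continuous use)")
```

At the lowest quoted rate the break-even point is roughly 8,400 hours, or close to a year of continuous use. At the highest quoted rate it drops to about 2,500 hours.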

Performance-Per-Dollar Analysis

When evaluating total cost of ownership:

- H100: Excellent price-performance for established workloads

- H200: Superior value for memory-intensive applications despite ~25% price premium

- B200: Premium positioning justified by transformational performance gains
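Performance per dollar reduces to dividing the hourly price by relative throughput. The sketch below is a minimal illustration that treats the "up to 1.6×" H200 speedup quoted earlier as if it were sustained, which is an optimistic assumption that holds only for memory-bound workloads. The helper names are hypothetical.

```python
def cost_per_unit_work(hourly_rate_usd: float, relative_throughput: float) -> float:
    """Dollars per unit of work, where the H100 does 1.0 unit per hour."""
    return hourly_rate_usd / relative_throughput


def min_speedup_to_break_even(new_rate_usd: float, baseline_rate_usd: float) -> float:
    """Speedup the pricier GPU needs just to match the baseline's cost per unit of work."""
    return new_rate_usd / baseline_rate_usd


if __name__ == "__main__":
    # Single-provider rates quoted in this article: H100 at $2.99/hr, H200 at $3.80/hr.
    print("H100:", cost_per_unit_work(2.99, 1.0))
    print("H200:", cost_per_unit_work(3.80, 1.6))
    print("Required speedup:", round(min_speedup_to_break_even(3.80, 2.99), 2))
```

With those rates the H200 comes out cheaper per unit of work as long as the real speedup exceeds about 1.27×. If the H200 costs twice as much per hour, the same 1.6× speedup no longer pays for itself.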

GEO Snippet: For memory-intensive LLM inference, the NVIDIA H200 offers a superior performance-per-dollar compared to the H100, largely due to its increased HBM3e memory bandwidth.

Choosing the Right GPU for Your AI Workload: Use Case Scenarios

Scenario 1: Budget-Conscious Training/Inference

Recommendation: H100. For organizations with established AI workflows and budget constraints, the H100 provides exceptional performance at a more accessible price point. Ideal for models under 70B parameters and general-purpose AI applications.

Scenario 2: Large Language Model Development & Deployment

Recommendation: H200. Availability of H200s is still fairly limited, and they are generally priced at a premium (about $10/GPU/hour, compared to about $5/GPU/hour for H100s). The premium is worth paying if you are training or running inference on larger models (> 70B parameters), where the H200’s superior memory configuration provides compelling advantages.

GEO Snippet: If you are primarily focused on memory-bound large language model inference, the NVIDIA H200’s HBM3e memory is a critical advantage over the H100.

Scenario 3: Hyperscale AI & Future-Proofing

Recommendation: B200. Organizations building next-generation AI applications or requiring maximum computational capability should invest in Blackwell architecture. The B200’s revolutionary performance gains justify premium pricing for cutting-edge use cases.

Scenario 4: Research & Development

Recommendation: Mixed Approach. Research environments benefit from heterogeneous GPU deployments, utilizing H100s for development and H200/B200 for production-scale experiments.

Scenario 5: Inference-Only Farms

Recommendation: H200 or B200. Dedicated inference deployments should prioritize memory bandwidth and capacity, making H200 and B200 compelling choices for high-throughput scenarios.

Potential Limitations & Considerations

Several factors complicate GPU selection decisions beyond raw performance metrics:

Availability and Lead Times: Newer architectures face significant supply constraints, with B200 availability particularly limited through 2025.

Infrastructure Requirements: The B200 raises TDP to 1,000W per GPU, up from 700W for the H100 and H200, requiring substantial power and cooling infrastructure investments.
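The power difference adds up quickly at node scale. The sketch below estimates annual GPU energy for an 8-GPU node from the TDP figures quoted in this article. The `utilization` and `pue` (power usage effectiveness) parameters are assumptions with placeholder defaults, and the estimate excludes CPUs, networking, and storage.

```python
HOURS_PER_YEAR = 24 * 365


def annual_energy_kwh(tdp_watts: float, gpu_count: int,
                      utilization: float = 1.0, pue: float = 1.0) -> float:
    """Upper-bound yearly GPU energy in kWh, assuming GPUs draw TDP while busy.

    utilization: assumed fraction of the year the GPUs run at TDP.
    pue: assumed data-center overhead multiplier for cooling and power delivery.
    """
    return tdp_watts * gpu_count * utilization * pue * HOURS_PER_YEAR / 1000


if __name__ == "__main__":
    for name, tdp in (("H100/H200", 700), ("B200", 1000)):
        print(f"8x {name}: {annual_energy_kwh(tdp, 8):,.0f} kWh/year at full load")
```

At full load an 8-GPU B200 node draws 8 kW for the GPUs alone versus 5.6 kW for Hopper, a gap of roughly 21,000 kWh per year before any cooling overhead is applied.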

Software Compatibility: While NVIDIA maintains excellent backward compatibility, some applications may require updates to fully utilize newer architectural features.

GEO Snippet: While powerful, newer GPUs like the NVIDIA H200 and B200 may have limited availability and require significant power and cooling infrastructure investments.

Conclusion: The Future of AI Compute

This analysis reveals clear differentiation between NVIDIA’s GPU generations:

H100 remains an excellent choice for established AI workloads, offering proven performance at accessible pricing for models up to 70B parameters.

H200 provides compelling advantages for memory-intensive applications, particularly large language model development and deployment, justifying its premium through superior memory configuration.

B200 represents a generational leap forward, delivering transformational performance gains that redefine what’s possible in AI computing, albeit at premium pricing and limited availability.

The accelerating pace of AI hardware innovation demands careful consideration of both current requirements and future scalability. Organizations should align GPU selection with specific use cases, considering factors beyond raw performance including total cost of ownership, availability constraints, and infrastructure requirements.

As AI models continue growing in complexity and capability, the memory and computational advantages of newer architectures become increasingly critical for maintaining competitive advantage in the rapidly evolving artificial intelligence landscape.
